Document Fraud

How is AI changing document fraud? We analyzed millions of documents and surveyed 90 practitioners to find out.

Documents flagged as fraudulent: ~6%

Increase in AI-generated document fraud: nearly 5× (April to December 2025)

Fraud leaders concerned about AI-enabled fraud: 97.8%

Brianna Valleskey

January 20, 2026

Introduction

Fraudsters have always sought to exploit the gap between what a document claims and what is actually true.

In the 1920s, Victor Lustig used fake documents to pose as a government official and convince scrap metal dealers to purchase the Eiffel Tower (twice). What's different 100 years later is the scale and speed at which that exploitation can happen.

Today, generative AI can help produce a realistic pay stub in seconds. Template marketplaces sell editable bank statements for under ten dollars. A fraudster with no technical skills can purchase, customize, and submit convincing documentation without ever touching Photoshop.

And it is working. Inscribe flagged approximately 6% of all documents processed across our network as fraudulent last year. That is roughly one in sixteen documents showing signs of manipulation, fabrication, or misrepresentation.

When I started in fraud back in the 1990s, there were really only two types: credit card fraud and check fraud. As banks released more products, fraud got more complicated, but the tech also improved pretty dramatically.

This report synthesizes what we learned in 2025 from three sources: detection data from the Inscribe network spanning millions of documents across banks, credit unions, fintechs, and lenders; a survey of 90 fraud and risk leaders conducted in November and December 2025; and interviews with practitioners including senior underwriters, chief risk officers, fraud managers, and industry experts.

The findings point in a clear direction. Document fraud is accelerating. Manual review is reaching its limits. And organizations that adapt will pull ahead of those that do not.

But this is an evolution, not a defeat. The same AI capabilities fraudsters use to create convincing fakes can be deployed to detect them. The fraud fighters we interviewed are not discouraged. They are adopting new tools, sharing intelligence across institutions, and rethinking workflows that have not changed in decades.

What follows is organized into four sections:

By the Numbers

The AI Arms Race

The Cost of Inaction

Winning Strategies

This report is designed to help fraud fighters and risk leaders understand the landscape, benchmark their approach, and identify opportunities to strengthen their defenses.

Let's start with what the data tells us.

01

By the Numbers

Every fraud strategy starts with understanding the threat. This section draws on Inscribe's proprietary network data spanning millions of documents processed across hundreds of financial institutions, combined with survey responses from 90 fraud and risk leaders.

Together, they reveal where document fraud is concentrated, which document types carry the highest risk, and where fraudsters are finding soft spots in verification workflows.

The scale and shape of document fraud heading into 2026

In 2025, approximately 6% of all documents processed were flagged as fraudulent. That translates to roughly one in sixteen documents showing indicators of manipulation, fabrication, or misrepresentation.

To put that in context: if an organization processes 10,000 loan applications per year and each application includes three documents, it's potentially looking at roughly 1,800 fraudulent documents annually.

And for a fraud team reviewing 500 applications a week, that translates to roughly 30 flagged documents requiring investigation every week, even at just one document per application. Some weeks spike higher, and holidays and promotional periods compound the volume, so the question becomes whether a manual review process can keep up.
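
The volume arithmetic above can be reproduced with a minimal sketch. The function name and the documents-per-application parameter are illustrative assumptions; only the 6% rate and the application counts come from the report. Note that the weekly figure of 30 corresponds to one document per application, so the three-document case is included for comparison.

```python
FLAG_RATE = 0.06  # approximate share of documents flagged in 2025, per this report


def expected_flagged(applications: int, docs_per_application: int,
                     flag_rate: float = FLAG_RATE) -> int:
    """Expected number of flagged documents, rounded to the nearest whole document."""
    return round(applications * docs_per_application * flag_rate)


# Annual example: 10,000 applications with three documents each.
annual = expected_flagged(10_000, 3)

# Weekly example: 500 applications. The report's figure of 30 matches one
# document per application; three documents each is shown for comparison.
weekly_one_doc = expected_flagged(500, 1)
weekly_three_docs = expected_flagged(500, 3)

print(annual, weekly_one_doc, weekly_three_docs)
```

Swapping in an institution's own volumes and document counts gives a rough sense of the weekly investigation load before any seasonal spikes.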

The volume is relentless for investigators who work these cases daily. Las Vegas Financial Crimes Detective Marc Evans spent six years in financial crimes before shifting to cyber crimes, and document fraud was a constant.

I would see document fraud every single day. Fake DMV temporary passes, DMV titles, fake treasury bonds that were completely made from scratch. I actually caught a guy one time who was in the middle of making a fake treasury check, and it was still up on his computer screen in Photoshop.

The cases Evans describes are not outliers. They reflect the industrialization of document fraud that our network data confirms.

This tracks with what we have heard across the industry. Frank McKenna, Chief Fraud Strategist at Point Predictive and author of the Frank on Fraud blog, has watched fraud evolve for three decades. He describes fraudsters as increasingly sophisticated in exploiting multiple channels and document types simultaneously.

As document quality improves, the challenge grows from a detection problem into an operational burden as well. When fraudulent and legitimate documents are indistinguishable at first glance, review teams face higher workloads, longer queues, and increased risk of both missed fraud and unnecessary friction for good customers.

Fraud pressure is broadly distributed across document types

When we examine fraud rates by document type, a clear pattern emerges: most documents used to verify critical facts exhibit a similar baseline fraud rate, generally in the 4–7% range.

Bank statements, pay stubs, tax forms, business filings, and other financial documents are all consistently targeted. This indicates that document fraud is not confined to a single document class, nor driven by one specific verification step. Instead, fraud pressure is broadly distributed across workflows wherever documents are used to establish trust.

Utility bills show a notably higher fraud rate than other document categories in Inscribe’s network, but this doesn't mean utility bills are uniquely dangerous. Instead, they sit at the intersection of identity, convenience, and perception.

Utility bills are commonly used to verify proof of address, often as a secondary or supporting document. Because an address underpins many downstream decisions, from account opening to credit approval, utility bills frequently serve as an early anchor in identity formation.

At the same time, altering a utility bill often feels less serious than altering a primary financial document, even though the legal and risk implications are the same. In many cases, the behavior is not overtly malicious. Applicants may have recently moved, lack updated documentation, or “fix” an address without recognizing the action as deception.

The intent varies, but the risk signal does not. This perception gap creates a training opportunity. If reviewers unconsciously apply less scrutiny to "secondary" documents, they may be overlooking exactly the documents that warrant more attention.

Applying consistent scrutiny across all documents used to verify critical facts helps protect institutions and customers, especially when individuals may unknowingly cross a line.

Financial details are edited in most documents

To understand what fraudsters are actually changing, we categorized every flagged document by whether the edits targeted identity information, financial details, or both.

In 2025, Inscribe observed the following breakdown across altered documents:

Historically, these categories tended to stay within a relatively narrow range year over year. In 2025, however, that pattern shifted. The share of documents involving both identity and financial manipulation increased sharply (from 40.2% of documents in 2024 to 59.8% of documents in 2025), while identity-only edits remained a small minority.

While our data shows that most flagged documents (91.2%) contain edits to financial details, these patterns cannot be cleanly mapped to a single fraud type. A document with both identity and financial edits could represent sophisticated first-party fraud, third-party misuse, or synthetic identities supported by fabricated documentation.
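
The two reported figures imply the rest of the breakdown if the categories are exhaustive. The sketch below is a derivation under that assumption, not a figure published in this report: it assumes every altered document is counted in exactly one of three buckets (identity only, financial only, or both) and that the 91.2% figure includes the documents with both kinds of edits.

```python
from decimal import Decimal

# Shares reported for 2025, as percentages of altered documents.
financial_any = Decimal("91.2")   # financial edits, alone or with identity edits
both = Decimal("59.8")            # identity and financial edits together

# Assumption (not stated in the report): every altered document falls into
# exactly one of three buckets: identity only, financial only, or both.
financial_only = financial_any - both
identity_only = Decimal("100") - financial_any

print(financial_only, identity_only)
```

Under those assumptions, financial-only edits would account for about 31.4% of altered documents and identity-only edits for about 8.8%, which is consistent with the description of identity-only edits as a small minority.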

As Laura Spiekerman, Co-founder and President of Alloy, noted:

In some cases what appears to be first-party fraud is actually synthetic fraud. And a lot of that is also hybrid. Some details may be real, some details may be fake. The AI makes this faster and easier to do at scale.

Fraud can be hard to categorize, and it’s increasingly hard to categorize. There are blurred lines now between categories that don’t fit as neatly into the buckets we designed a decade ago.

Laura Spiekerman — Co-Founder & President, Alloy

This growing ambiguity helps explain why first-party fraud and related behaviors often go underreported. Many organizations hesitate to classify financial manipulation as fraud when the applicant appears legitimate, intent is unclear, or identity checks pass.

Michael Coomer, Director of Fraud Management at BHG Financial, notes that this hesitation can obscure the true scope of the issue:

There is often an unwillingness to acknowledge that this behavior exists within your customer base. Reframing what we consider a ‘good customer’ is uncomfortable, but necessary.

Michael Coomer — Director of Fraud Management, BHG Financial

The takeaway is not that fraud attribution must be perfect. Rather, it is that financial manipulation cuts across first-party, third-party, and synthetic identity fraud, and detection strategies that rely primarily on identity signals or a single verification step will miss meaningful risk.

As fraud categories blur, effective prevention depends on layered, lifecycle-based controls that evaluate financial consistency alongside identity, behavior, and context. Organizations that focus solely on onboarding checks or static identity verification are increasingly exposed as fraud tactics evolve.

Bank statements top the list of fraud fighter concerns

In a survey of 90 fraud and risk leaders, 85.6% of respondents cited bank statements as the document type they are most concerned about, the highest of any category.

The challenge with bank statements is their complexity. Unlike a pay stub with a handful of fields, a bank statement contains dozens of transactions, running balances, dates, and formatting elements. That complexity creates more opportunities for subtle manipulation and more work for reviewers trying to catch it.

Bank statements, especially, are very complicated because we have to parse transactions. We had developed a system in terms of checking, ‘Do you see a transaction from Fleetio?’ which is software that our legitimate customers use. That was our way of analyzing these bank statements from a fraud and credit perspective. It was heavily manual, everything used to take an hour.

Anurag Puranik — Chief Risk Officer, Coast

Pay stubs and business financial documents rank second and third, reflecting the income verification use case that drives much of document fraud in lending.

For fraud teams, this means the documents requiring the most scrutiny are also the ones that appear most frequently. There's no low-volume segment to deprioritize — the pressure is constant across the documents that matter most.

By the Numbers

Document fraud operates at scale

~6% of documents flagged across Inscribe’s network, making fraud an operational reality, not an edge case.

Fraud pressure is widespread

Most document types show similar baseline fraud rates. No single class is immune.

Financial manipulation dominates

Over 90% of flagged documents include altered financial details, either alone or alongside identity changes.

Bank statements are the top concern

85.6% of risk leaders cite bank statements as the most vulnerable document type.

The data shows where fraud pressure exists today, but it doesn't explain why this moment feels different to the fraud fighters living it. For that, we need to examine what's changed: the tools.

02

The AI Arms Race

How generative AI is reshaping document fraud on both sides

The previous section showed where document fraud is concentrated: bank statements, pay stubs, financial documents across the board. But the data doesn't explain why this moment feels different to the fraud fighters living it.

What makes AI document fraud different is speed (and as a result, volume). Fraudsters can now produce hundreds of variations in minutes instead of hours, and the barrier to entry has collapsed.

This section examines how AI is reshaping document fraud from both sides, and why the organizations that move fastest will define the next era of fraud prevention.

AI-generated document fraud is an emerging threat

Across Inscribe's network in 2025, AI-generated document fraud comprised less than 5% of the total fraudulent documents we detected. The majority of document fraud is still template-based (which we'll cover later in this section), but based on the data observed we do feel this is an emerging threat.

What's worth noting is that the monthly volume of AI-generated document fraud increased nearly fivefold between April and December 2025. This growth was not linear. Activity rose sharply through early summer, dipped in August and September, then accelerated again into the fall.

Several factors likely contributed to this pattern. Seasonality plays a role, as overall document volume typically declines during late summer. At the same time, changes in how generative tools produced document metadata temporarily reduced detection coverage, before new signals and detectors were introduced later in the year.

We'll be closely monitoring and providing updates on this trend in 2026, as we expect LLMs and generative AI models to improve alongside more widespread fraudster adoption.

AI document fraud is a near-universal concern

This acceleration is reflected in how fraud and risk teams view the threat: In a survey of 90 fraud and risk leaders, 97.8% expressed concern about AI-enabled document fraud.

The concern is justified, but it's worth distinguishing between current reality and future risk.

Today, many AI-generated documents are unconvincing under close inspection. The immediate challenge is the volume of attempts, not their sophistication.

But the quality is improving rapidly. Earlier this year, we published research showing how AI-generated receipts and documents are becoming harder to distinguish from legitimate ones. Detection strategies that rely on visual inspection are becoming less reliable with each generation of tools.

The documents are just so much better looking now than they used to be. You can't necessarily tell if the spacing is off because thanks to AI and some of these other tools, the documents are just so much better looking now.

Timothy O'Rear — Senior Underwriter, Rapid Finance

This shift does not mean document fraud has become invisible, but it does mean that surface-level review is no longer sufficient. As visual quality improves, meaningful signals move deeper into the document: transaction consistency, metadata artifacts, internal logic, and cross-document patterns.
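
One of the deeper signals named above, internal logic, can be illustrated with a running-balance check. This is a minimal sketch under stated assumptions, not a description of Inscribe's detectors: the `Transaction` class and `find_balance_breaks` function are hypothetical names, and the sketch assumes transaction amounts and printed balances have already been extracted from the statement.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Transaction:
    amount: Decimal         # positive for deposits, negative for withdrawals
    balance_after: Decimal  # running balance printed next to the transaction


def find_balance_breaks(opening_balance: Decimal,
                        transactions: list[Transaction]) -> list[int]:
    """Return indices of rows whose printed balance does not equal the
    previous printed balance plus the row's amount."""
    breaks = []
    previous = opening_balance
    for index, tx in enumerate(transactions):
        if previous + tx.amount != tx.balance_after:
            breaks.append(index)
        # Continue from the printed value so later rows are checked
        # against what the document itself claims.
        previous = tx.balance_after
    return breaks


# Hypothetical statement where the second deposit was inflated from 500 to 5000.
rows = [
    Transaction(Decimal("-200"), Decimal("800")),
    Transaction(Decimal("5000"), Decimal("1300")),
    Transaction(Decimal("-100"), Decimal("1200")),
]
print(find_balance_breaks(Decimal("1000"), rows))
```

A check like this only catches edits that leave the arithmetic inconsistent, so it would be one layer among several alongside metadata and cross-document signals.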

The implication for fraud teams is clear. Detection strategies that depend primarily on appearance or manual inspection are increasingly fragile in an AI-assisted environment.

Ten years ago, you could spot a fake passport or a fake driver's license that was sent in. It looked too digitally perfect or it was a bad Photoshop job. A lot of the phishing emails and phishing texts were easy to spot ten years ago as well. There were major quality issues, grammar, spelling errors, just low quality logos. But we're going to see that quality go up with AI.

This point, from Angela Diaz, Senior Principal of Operational Risk Management at Discover, captures the trajectory. Today, many AI-generated documents still have visible flaws, but the gap is closing. The question is not whether AI-generated documents will become indistinguishable from real ones, but when.

Two types of AI-enabled document fraud

Not all AI-enabled document fraud looks the same. In practice, our team has found that it falls into two distinct patterns: documents generated entirely from scratch, and legitimate documents that have been selectively edited using generative tools.

- AI-generated documents are created entirely from scratch using image generation models. These documents never existed in legitimate form. A fraudster prompts an AI system to create a bank statement, pay stub, or utility bill, and the model produces something that looks convincing at first glance.

- AI-edited documents start as real documents that fraudsters modify using generative tools to change specific fields: names, dates, account numbers, transaction amounts. Because most of the document is genuine, these modifications are harder to catch.

Of these two patterns, AI-edited documents currently pose the greater risk. The base document is real, so it passes many surface-level checks. The edits are small and targeted. Detection requires looking beyond appearance to metadata, internal consistency, and cross-document patterns.

AI is also lowering the barrier to entry in quieter ways. Fraudsters do not always need to generate documents outright. Large language models (LLMs) can simply teach users how to alter documents, walking them through tools like Photoshop step by step.

This may be the most underappreciated impact of AI on document fraud. The flashy threat is AI-generated documents. The quieter threat is AI as a tutor. Someone who has never edited a PDF can now get step-by-step instructions in minutes. The skill barrier that once protected institutions has effectively disappeared.

I was playing around with Gemini's Nano and was shocked at how easy it is to change a document. All you do is put a simple, one sentence prompt into it and go change the name from this to this and it does it. AI is just a whole new level of fraud that's going to show up in documentation fraud.

Marc Evans — Las Vegas Financial Crimes Detective

AI fraud tactics used alongside document manipulation

AI-enabled fraud doesn't stop at the document. Increasingly, fraudulent documents are just one component of a broader scheme — supported by AI-generated scripts, fabricated business identities, and convincing narratives that hold up under verification.

Michael Coomer, Director of Fraud Management at BHG Financial, emphasizes that the risk is not limited to individual documents. AI is increasingly used to support fraud end to end, making interactions, identities, and supporting materials appear more legitimate.

Fraudsters are using AI to support their interactions with victims, particularly in a scam environment, where they are creating more realistic identities and more realistic stories.

Michael Coomer — Director of Fraud Management, BHG Financial

In this context, documents are not always the primary attack vector. They are often the reinforcing evidence used to validate a story that has already been made convincing through AI-assisted scripting, coaching, and narrative construction.

This matters because document fraud does not happen in isolation. It is typically part of a broader scheme that includes identity fraud, application fraud, and social engineering. And AI is making all of those components more convincing.

What I have noticed recently is when we call people for verification, the signal has just vanished. Fraudsters can type into ChatGPT and say 'I am a business running this and give me a script to talk to a customer service agent' and you can have a very detailed nice script that makes you sound really legitimate and knowledgeable. Our mind was blown.

Anurag Puranik — Chief Risk Officer, Coast

This erosion of traditional signals extends beyond phone calls. Fraudsters are using AI to:

- Generate realistic business websites in minutes using no-code tools

- Create professional-looking email domains that mimic legitimate businesses

- Produce consistent narratives across application forms, documents, and verbal verification

- Research and incorporate industry-specific terminology that makes their stories more believable

Patrick Lord at Rapid Finance has seen fraudsters get creative with web presence in ways that are easy to overlook.

I can go buy ‘ABC Solutionss’ with two S's at the end. And you either have it forwarded or it looks similar enough to ‘ABC Solutions’ with one S at the end. It can be really easy to overlook something like that. You have to make sure there's the proper checks that go into it. You're actually checking out that domain and maybe having some automated checks and occasional human checks behind it.

Patrick Lord — Senior Project Manager, Rapid Finance
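
The kind of automated domain check Lord mentions could look something like the sketch below. It is an illustration only: the function name, the 0.9 threshold, and the example domains are assumptions, and it uses the standard library's `difflib.SequenceMatcher` as a simple similarity measure rather than any particular vendor's method.

```python
from difflib import SequenceMatcher


def is_lookalike(candidate: str, known: str, threshold: float = 0.9) -> bool:
    """Flag domains that are nearly, but not exactly, the same as a known domain."""
    candidate, known = candidate.lower(), known.lower()
    if candidate == known:
        return False  # an exact match is the known domain itself
    similarity = SequenceMatcher(None, candidate, known).ratio()
    return similarity >= threshold


# Hypothetical domains modeled on the "two S's" example in the quote.
print(is_lookalike("abcsolutionss.com", "abcsolutions.com"))
print(is_lookalike("example.org", "abcsolutions.com"))
```

A similarity threshold will produce both false positives and false negatives, which is why the quote pairs automated checks with occasional human review.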

The implication is that document verification cannot operate in a silo. A convincing document submitted alongside a convincing website, a convincing phone presence, and a convincing application creates a reinforcing web of apparent legitimacy. Detection systems need to evaluate documents in context, not in isolation.

Jen Lamont, BSA Compliance Officer and Fraud Manager at America's Credit Union, emphasizes the importance of looking beyond the surface.

We're making sure that the phone number is tied to the proper member, to the proper business. We're making sure that the emails seem to make sense. Sometimes we'll get emails that appear to belong to someone else. We're doing Google searches, making sure that we can find the business if it's applicable on Google. Restaurants, clothing stores, that sort of thing, those kinds of businesses should have a web presence.

Jen Lamont — BSA Compliance Officer & Fraud Manager, America's Credit Union

The document template store economy remains a high-volume threat

Despite advances in generative AI, the path of least resistance for most fraudsters remains surprisingly low-tech: purchasing pre-made document templates from online marketplaces.

In 2025, 1 out of every 5 flagged documents across Inscribe’s network showed signs of template-based manipulation, a sharp increase from 1 out of every 14 in 2024. This growth reflects not a shift toward more sophisticated fraud, but toward more efficient, repeatable fraud.

Inscribe's research into Google search terms also found significant volume for fraud-related keywords:

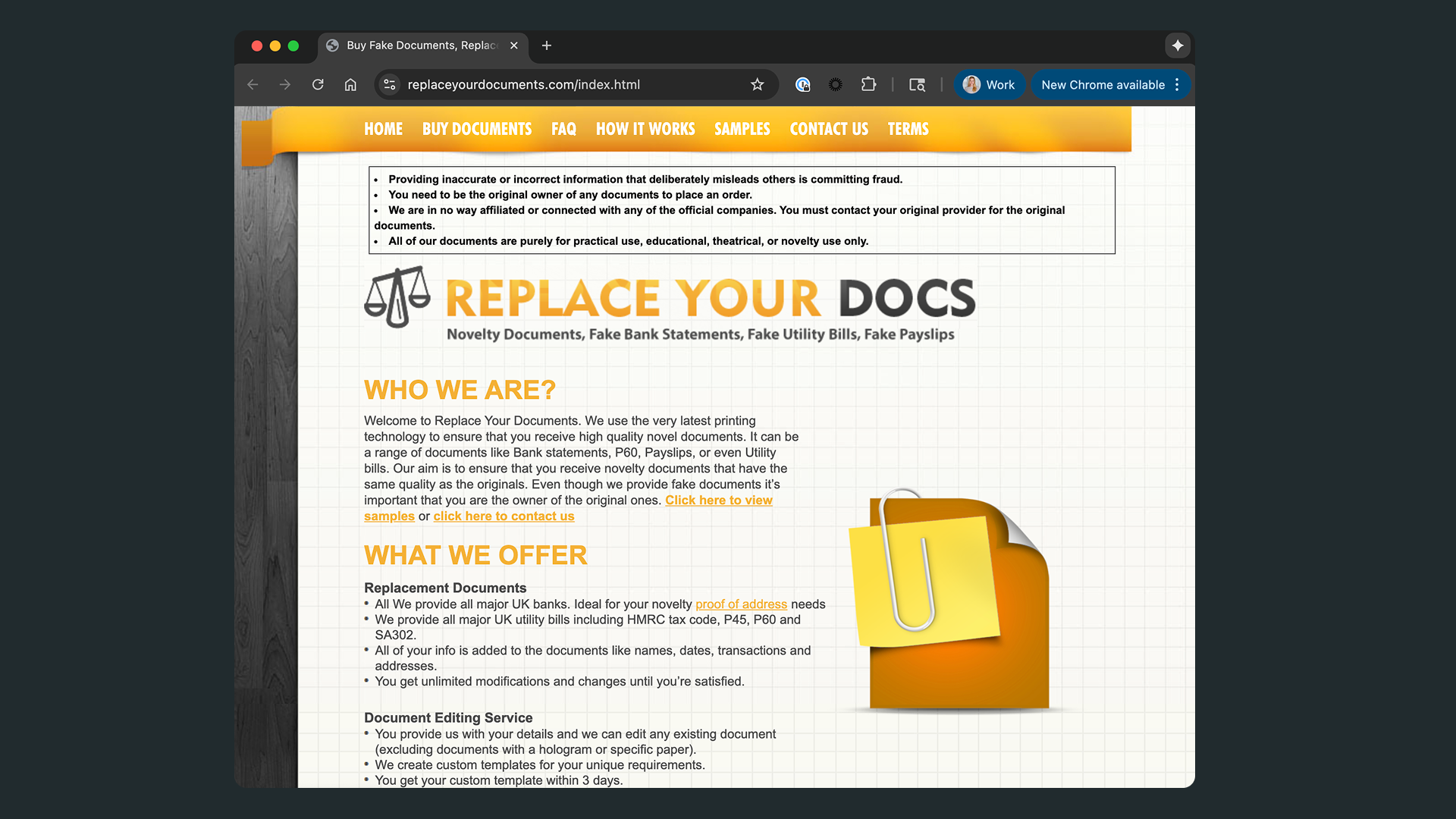

A growing ecosystem of websites now sells editable document templates (bank statements, pay stubs, utility bills, tax forms) for as little as $10. These sites operate openly on the public web, positioning themselves as providers of "novelty" or "replacement" documents while offering exactly what a fraudster needs.

One UK-based site, Replace Your Documents, illustrates the model. The homepage promises “high quality novel documents” using “the very latest printing technology.”

The product catalog includes:

- Bank statements from “all major UK banks”

- Utility bills including HMRC tax codes, P45s, P60s, and SA302s

- Payslips printed on “high quality Sage payslip paper” with figures “calculated based on your net/gross salary”

- Custom document editing where customers provide details and the site edits existing documents

- Custom templates built to order within 3 days

The site offers same-day delivery of digital PDFs, printed copies within 2-3 days, and “unlimited modifications and changes until you're satisfied.”

The economics are straightforward: A fraudster can purchase an editable bank statement template, customize it with a target's information, and submit it as part of a loan application. The investment is minimal. The potential return, if the loan is approved, is substantial.

Hailey Windham, Community Banking Lead at Sardine and host of Fraud Forward, points out that fraudsters will always take the easiest path available.

Fraudsters don’t want resistance. The moment you make fraud hard, expensive, or frustrating, they move on to someone else.

Hailey Windham — Community Banking Lead, Sardine

This explains a pattern in our detection data: many fraudulent documents aren't sophisticated. Fraudsters test which templates pass verification, then scale what works. No Photoshop skills required; just a credit card and a few minutes to find the right marketplace.

The combination of template stores and AI editing tools has created hybrid fraud: documents that are neither fully synthetic nor simply purchased fakes. Catching them requires detection systems built for both.

The AI Arms Race

AI-generated document fraud is accelerating

Detected AI-generated document fraud increased nearly 5× from April to December 2025.

Concern is nearly universal and urgency is high

97.8% of fraud leaders report concern about AI-generated or AI-edited documents, and 65.6% of respondents say they are very concerned about AI-enabled document fraud.

Template-based fraud remains dominant by volume

In 2025, 1 in 5 flagged documents was template-based, up from 1 in 14 in 2024.

Document fraud demand is visible in search behavior

The strongest defenses combine AI-driven scale with experienced fraud fighters who can interpret context, intent, and edge cases.

Understanding the threat alone doesn't translate to action, especially when legacy processes feel "good enough." This section examined how AI is changing the fraud landscape; the next looks at what happens when organizations do not adapt.

03

The Cost of Inaction

Why legacy approaches cost more than ever

In this section, we examine three dimensions of the cost of inaction: the operational burden of manual review, the competitive disadvantage of slow decision-making, and compounding fraud losses.

Manual review breaks at scale

For many institutions, the breaking point is not a single catastrophic fraud event. It is the slow accumulation of inefficiencies that compound over time until the gap between what the team can handle and what the business requires becomes unsustainable.

The costs show up in operational bottlenecks, missed fraud categories, and customer attrition. They show up in fraud teams working until midnight to clear backlogs. They show up in good customers who take their business elsewhere because decisions take too long.

Before Inscribe, thousands of documents a day were reviewed by a human pair of eyes. I have memories of us being super busy some nights and staying up until midnight just trying to get through the documents manually.

Timothy O'Rear — Senior Underwriter, Rapid Finance

The time required for thorough manual review is substantial. Before implementing automated verification, some institutions reported spending 60 to 90 minutes per application on document review alone.

It used to take like an hour to one and a half hours just to do one customer. Most of them today are automated.

Anurag Puranik — Chief Risk Officer, Coast

Patrick Lord describes the manual processes that Rapid Finance used before adopting automated tools.

We would have these custom spreadsheets that we'd have to spot check some math. And we all had these workflows where we would open up all the three or four documents next to each other just compare the beginning and ending balances. And it was like this whole mental calculus that went on.

Patrick Lord — Senior Project Manager, Rapid Finance

Those workflows worked when fraudsters were using basic image editing tools. They don't work when AI ensures the math adds up, the formatting is consistent, and the metadata looks clean.
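
The side-by-side comparison of beginning and ending balances described in the quote can be sketched in a few lines. The `StatementSummary` class and `find_continuity_gaps` function are hypothetical names, and the sketch assumes the statements are consecutive and already sorted chronologically. As the paragraph above notes, passing this check is no longer evidence that a document is genuine.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class StatementSummary:
    period: str               # label such as "2025-01" (hypothetical format)
    opening_balance: Decimal
    closing_balance: Decimal


def find_continuity_gaps(statements: list[StatementSummary]) -> list[tuple[str, str]]:
    """Return (earlier, later) period pairs where the closing balance of one
    statement does not equal the opening balance of the next."""
    gaps = []
    for earlier, later in zip(statements, statements[1:]):
        if earlier.closing_balance != later.opening_balance:
            gaps.append((earlier.period, later.period))
    return gaps


# Hypothetical three-month run where February's opening balance was altered.
history = [
    StatementSummary("2025-01", Decimal("1000.00"), Decimal("1450.25")),
    StatementSummary("2025-02", Decimal("2450.25"), Decimal("2900.00")),
    StatementSummary("2025-03", Decimal("2900.00"), Decimal("3100.10")),
]
print(find_continuity_gaps(history))
```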

At 45 minutes of review per application and 200 applications a day, you're consuming 150 analyst-hours daily (the equivalent of roughly 19 full-time employees working eight-hour days) on document checks alone. That's a structural mismatch between how fraud scales and how manual review doesn't.
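
The workload figure works out as follows. This is a back-of-the-envelope sketch that assumes one 45-minute review per application and an eight-hour analyst day; the day length is an assumption introduced here, not a number from the report.

```python
def daily_analyst_hours(minutes_per_review: float, reviews_per_day: int) -> float:
    """Total analyst-hours needed per day for manual document review."""
    return minutes_per_review * reviews_per_day / 60


HOURS_PER_ANALYST_DAY = 8  # assumption: one full-time analyst reviews for 8 hours

hours = daily_analyst_hours(45, 200)
full_time_equivalents = hours / HOURS_PER_ANALYST_DAY

print(hours, full_time_equivalents)
```

At these inputs the result is 150 analyst-hours, or 18.75 full-time equivalents, before accounting for breaks, meetings, or any work other than document review.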

Slow decisions create competitive disadvantage

Document verification does not happen in a vacuum. It is one step in a customer journey that includes application, underwriting, approval, and funding. When that step takes too long, the entire journey suffers.

For lenders especially, speed matters. Good customers have options. If your process takes days while a competitor takes hours, you will lose business.

The big selling point for a lot of our financing is that we can get the money into clients' accounts quickly within 24 to 48 hours. Well, if you're taking 24 to 48 hours to even look at their bank statements before you can even get a quote out to them, you're basically guaranteed to lose that client.

This dynamic creates adverse selection. The customers who are willing to wait are often the ones who have no other options. The best customers, the ones with strong credit and legitimate documentation, go elsewhere.

Coast has built their process around speed, with a goal of deciding 90% of applications the same day they are submitted.

If somebody exploits a loophole in your system, all the fraudsters in the world are instantly going to know about it. And they're all attacking you within a matter of days to weeks, not months. Your time to reaction has to be really fast. I have known stories of people losing millions of dollars in a matter of a week where something very obvious was just wrong.

Anurag Puranik — Chief Risk Officer, Coast

Coast's same-day SLA is possible because document verification is automated. If everything checks out, the application moves forward without human intervention. If something is flagged, a human reviewer steps in with all the context they need to make a quick decision.

The result is a customer experience that feels seamless and, more importantly, a fraud operation that can adapt as fast as threats evolve. Good customers get approved quickly. Suspicious applications get the scrutiny they deserve. And the fraud team isn't buried in a backlog of routine reviews.

Jen Lamont sees speed as essential for serving members effectively.

It's so important for us to lean on automation and AI because our job, especially in the credit union space, is to help our members. One fraud situation can be a 45 minute conversation, an hour long conversation. If I don't have some automation on the back end in my system, how am I supposed to give my undivided attention to our members? They need the emotional support.

Jen Lamont — BSA Compliance Officer & Fraud Manager, America's Credit Union

When routine document checks are automated, fraud analysts can focus on what humans do best: having difficult conversations, supporting victims, and investigating complex cases that require judgment and empathy.

How inaction compounds into fraud losses

The cost of inaction in fraud prevention rarely shows up as a single, headline-grabbing loss. More often, it compounds quietly.

Consider a simplified example.

Assume an institution processes 10,000 applications per month. If 6% contain document fraud signals, that is 600 risky cases entering the funnel. If legacy controls miss even 10% of those cases, that is 60 fraudulent applications per month making it through.

Now layer in basic economics:

- If the average exposure per approved application is $25,000

- And only a fraction result in full loss, say 20%

- That is $300,000 in potential losses per month

- Or $3.6 million in fraud losses annually
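
The simplified example above can be written as a small model so the inputs are easy to vary. The function and parameter names are illustrative assumptions; the input values are the ones used in the example. `Decimal` is used so the percentages multiply out exactly.

```python
from decimal import Decimal


def monthly_fraud_loss(applications: int, fraud_rate: Decimal, miss_rate: Decimal,
                       exposure: Decimal, loss_rate: Decimal) -> Decimal:
    """Expected monthly loss from fraudulent applications that slip through."""
    risky_cases = applications * fraud_rate    # applications with fraud signals
    missed_cases = risky_cases * miss_rate     # cases legacy controls miss
    return missed_cases * exposure * loss_rate


monthly = monthly_fraud_loss(
    applications=10_000,
    fraud_rate=Decimal("0.06"),   # 6% contain document fraud signals
    miss_rate=Decimal("0.10"),    # 10% of those are missed
    exposure=Decimal("25000"),    # average exposure per approved application
    loss_rate=Decimal("0.20"),    # share of missed cases resulting in full loss
)
annual = monthly * 12

print(monthly, annual)
```

Because every factor multiplies through, halving the miss rate halves the projected loss, which is one way to frame the return on better detection.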

That estimate does not include:

- Manual review costs

- Customer churn from slow decisions

- Downstream collections, disputes, or compliance overhead

- The second-order effect when fraud patterns are reused and scaled

In this context, fraud losses behave less like isolated incidents and more like interest-bearing debt; $3.6 million in fraud losses is roughly the fully-loaded cost of a 15-person fraud team. Organizations paying that figure in losses are funding fraud instead of prevention.

Fraud prevention can end up turning to revenue protection. When I look at a program like Inscribe, generally speaking, you catch one or two big deals of fraud that you wouldn't have caught, it pays for itself. Everything else after that, it's like you are protecting your revenue and growing it in a way where you can trust it.

Patrick Lord — Senior Project Manager, Rapid Finance

The cost of inaction is not just the fraud you miss. It is the compounding effect of a system that cannot keep up with the threats it faces.

The Cost of Inaction

Manual review breaks at scale

An hour of document review per application translates into dozens of analyst hours per day and millions in annual labor costs as volume grows.

Fraud losses compound quickly

Even modest miss rates create material exposure when fraud patterns are reused and scaled across hundreds of applications.

Reaction time is now a risk variable

In an AI-accelerated landscape, the window between vulnerability discovery and exploitation has compressed from months to days.

Hidden costs outweigh visible losses

Operational backlogs, reviewer burnout, and customer attrition often exceed direct fraud losses over time.

In the final section, we examine what the most effective fraud teams are doing differently: the human-AI partnership, layered defense strategies, and the power of industry collaboration.

04

Winning Strategies

What the most effective fraud teams are doing differently

The previous sections painted a challenging picture: document fraud is growing, AI is making fakes harder to detect, and manual processes cannot keep up. But the fraud fighters we interviewed are adapting.

This section examines what separates effective fraud programs from those that are falling behind. Three themes emerged consistently across our interviews and survey data: the importance of human-AI partnership, the necessity of layered defenses, and the power of industry collaboration.

These organizations are successfully managing document fraud at scale.

"We do need to be prepared for how fraudsters might utilize AI, but I think that we are much better served as fraud fighters focusing on how we can use AI to improve the foundational things that we have in place at our businesses, utilizing it to work smarter, not harder."

Angela Diaz — Senior Principal of Operational Risk Management, Discover

AI detection methods being adopted by fraud fighters

The same AI capabilities that make fraud easier to commit can also make it easier to detect. And the fraud fighters we spoke with are not waiting on the sidelines — they are actively adopting these tools.

The fraud fighters who have deployed AI for document fraud detection are seeing measurable results. BCU prevented $5.6 million in losses from confirmed altered documents in just the first nine months of 2025. Logix Federal Credit Union prevented $3 million in potential fraud losses in eight months. Kinecta saved $850,000 in fraud losses while reducing document review time by 99%.

Some of our largest dollar preventions in the past few years have come directly from Inscribe detections. We're talking millions in losses prevented, and that's made a measurable difference in how fast and how confidently we can stop fraud.

BCU's team has used layered detection to uncover fraud rings that would have been invisible to single-point analysis. In one case, they used Inscribe's X-Ray feature to connect multiple members tied to the same address, revealing that a document was originally owned by a blacklisted member with roughly $100,000 in charged-off loans.

In one case, a $75,000 auto loan was prevented after a bank statement was flagged for a fingerprint mismatch. That one signal changed the whole outcome of the case.

Tyler Davenport — Investigator, Account Protection Team, BCU

This democratization of technical capability matters. Fraud teams have historically been constrained by their access to engineering resources. If an analyst spots a new pattern, translating that insight into a detection rule requires developer time. With AI, that translation can happen in minutes.

Frank McKenna describes using Claude's analysis features to process years of FinCEN data in under 90 seconds, producing charts, trend analysis, and written insights that would have taken days to compile manually.

In about 60 seconds, you get something that probably would have taken one or two days for a person to do. Not necessarily that you take this and just publish it out there, but you can use it as a basis of understanding and then massage it into whatever analysis you're going to do.

Frank McKenna — Chief Fraud Strategist, Point Predictive

The same AI capabilities can also help fraud teams work smarter.

At the same time, the stereotype of the risk officer as a conservative gatekeeper is fading as a new generation of risk leaders leans into technology:

There's a theme amongst the perception of a risk officer that risk officers typically are a little behind the times and maybe don't lean into tools. The reality is that risk officers now tend to be much more proactive. We see the opportunity to say, how do we use modern technology to potentially offer an opportunity to advance ourselves and learn different things?

Michelle Prohaska — Chief Banking and Risk Officer, Nymbus

Human-AI collaboration becomes essential

The most effective fraud teams are not choosing between humans and AI. They are combining them.

AI handles what machines do well: processing large volumes of documents, detecting patterns across millions of data points, and flagging anomalies that would be invisible to human review. Humans handle what people do well: exercising judgment in ambiguous situations, building relationships with customers, and adapting to novel fraud schemes that do not match existing patterns.

This shift changes what it means to be a fraud analyst. Instead of spending hours on manual document review, analysts become orchestrators of AI systems, stepping in when judgment is required and letting automation handle the rest.

I don't think AI is going to replace fraud analysts at all. I think it'll change what fraud analysts do. I think it'll enhance what fraud analysts do. But I think that you're always going to have the human in the loop.

Frank McKenna — Chief Fraud Strategist, Point Predictive

The goal is not to remove humans from the process. It is to free them from routine work so they can focus on what matters most.

Jen Lamont agrees, pointing to something machines cannot replicate.

AI is never gonna have empathy. We need both in order to have a truly effective approach to fighting fraud. It has to be a dynamic approach.

Jen Lamont — BSA Compliance Officer & Fraud Manager, America's Credit Union

The winning formula is not AI alone. It is AI integrated thoughtfully into a broader fraud program, with humans providing oversight, judgment, and the relationship skills that machines cannot offer.

Hailey Windham puts it simply: manual review alone is no longer sufficient.

You cannot replace a fraud fighter with technology and fraud fighters today can’t do this job without tech either. You need both working together if you actually want to be effective.

Hailey Windham — Community Banking Lead, Sardine

“Swiss cheese strategy” meets fraud detection

The organizations with the strongest fraud programs use multiple layers of detection, each designed to catch what the others might miss.

Security professionals call this the "Swiss cheese model." Each layer of defense has gaps — like holes in a slice of cheese. No single layer stops everything. But stack enough slices together, and the holes stop aligning.

Here's how that looks in practice:

A fraudster who slips through document verification might get flagged by device intelligence. One who passes identity checks might trigger a behavioral anomaly. The power is in the combination.
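A rough way to see why stacking layers helps is to multiply per-layer miss rates. The sketch below is a minimal illustration under two loud assumptions: the miss rates are hypothetical numbers chosen for the example (they do not come from this report's data), and the layers are treated as statistically independent, which real-world signals rarely are. Correlated layers would let more fraud through than this estimate suggests.

```python
from math import prod


def combined_miss_rate(layer_miss_rates: dict[str, float]) -> float:
    """Chance that a fraudulent application evades every layer.

    Assumes the layers fail independently, so the individual miss
    rates can simply be multiplied together.
    """
    for name, rate in layer_miss_rates.items():
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"miss rate for {name!r} must be between 0 and 1")
    return prod(layer_miss_rates.values())


# Hypothetical per-layer miss rates, chosen only for illustration.
layers = {
    "document_verification": 0.10,
    "device_intelligence": 0.30,
    "identity_checks": 0.20,
    "behavioral_signals": 0.40,
}

single = layers["document_verification"]
stacked = combined_miss_rate(layers)
print(f"Document verification alone misses {single:.1%} of fraud")
print(f"All four layers together miss {stacked:.2%} of fraud")
```

Under these assumed numbers, no single layer is especially strong, yet the stacked miss rate falls well below that of the best individual layer. That is the "holes stop aligning" effect expressed as arithmetic.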

We use several systems and processes to vet our incoming members. When we get alerts from initial checks, that leads us down the path of requesting more documentation such as utility bills or lease agreements to verify proof of residence. We can stop the fraud from even getting in the front door.

Matt Overin — Manager of Risk Management, Logix FCU

At Nymbus, layered detection includes behavioral signals captured before a transaction even occurs. Michelle Prohaska describes how their fraud interdiction partner adds a critical layer of visibility.

We use Datavisor to collect both biometric and device data about how people log in. It can detect bot activities like are you moving your mouse in the way that a human would, or the way that a bot would? Are you copying and pasting information? What's the speed at which you typed or responded to things? You can pick up a lot of information just from the start of an experience.

Michelle Prohaska — Chief Banking and Risk Officer, Nymbus

Angela Diaz emphasizes that layering must be thoughtful, not just additive.

We can't skip the part where we look at what we've done historically, what's in place internally and foundationally, and what tweaks may need to happen there in order to strengthen those controls, rules, alerts, models. Otherwise, we will not be actually solving this in the most effective way possible. Layering on tools that are great, but that don't align with the way that these scams are actually showing up for us.

Angela Diaz — Senior Principal of Operational Risk Management, Discover

The goal is to build a system where each layer reinforces the others and gaps in one are covered by another.

Collaboration and community act as force multipliers

Fraudsters are organized. On Reddit, the subreddit r/IllegalLifeProTips amassed nearly one million members exchanging tips on everything from shoplifting to document fraud. While the community framed posts as "hypothetical" and "for entertainment purposes only," the advice was specific and actionable.

One user advised: “Download your most current [statement] and load it into Adobe Acrobat. Redact literally everything except your name, the dates, and your direct deposit that month, make sure you edit the amount to match your pay stub. Remember, fonts will [get] you. Use Matcherator or Font Squirrel to identify any fonts in the document and download and install them before you get started.”

Reddit has since banned the community. But the pattern is clear: when one forum shuts down, another emerges. The knowledge has already spread.

The most effective fraud teams have learned to do the same: organize and share what they know.

What gives me hope is the passion I’m seeing from fraud fighters right now. People aren’t afraid to reach out anymore. There’s crossover, collaboration, a feeling of true community because we all know this is the only way we have a shot at winning.

Hailey Windham — Community Banking Lead, Sardine

This kind of sharing creates network effects. When one institution spots a new fraud pattern, others can defend against it before they are hit.

A great example is the annual Fraud Round Table hosted by the account protection team at BCU, where credit unions come together to learn from one another. The event marked its 11th year in September 2025, with hundreds of credit union attendees joining virtually and in person.

Rapid Finance has built a culture of openness around fraud detection.

We openly talk about fraud. All of our sales reps, all of our underwriters, they've had sessions in terms of what these results mean. And we even have dedicated Slack channels that they can reach out to the experts on it. We're openly talking about it. We're not having to hide behind some sort of wall. We're willing to talk about fraud and the hits and the misses out in the open as an organization.

Patrick Lord — Senior Project Manager, Rapid Finance

Beyond individual institutions, the fraud fighting community has built its own infrastructure for sharing knowledge. Frank McKenna's newsletter Frank on Fraud has become a go-to resource for staying current on emerging schemes and industry trends. Events like Fraud Fight Club from the team at About Fraud bring practitioners together for candid, off-the-record conversations about what's working and what isn't.

Inscribe's podcast, Good Question, brings together fraud and risk leaders to discuss the big questions shaping the future of fraud, AI, and trust.

Recent episodes have featured practitioners from organizations quoted in this report, including how Coast is evolving their fraud strategy in the AI age and how Rapid Finance is catching deepfake documents by redesigning their underwriting workflow.

These practitioner-to-practitioner exchanges give fraud fighters a space to speak openly about challenges, compare notes on detection strategies, and build the relationships that make real-time intelligence sharing possible.

Jen Lamont sees education and collaboration as inseparable.

It's so important that we stay up to date on what's going on, and that's not always possible in our industry because it's so fast-paced. So having different team members following different groups and participating in different networking events and different conversations, and then coming back and sharing it with the team, it has made all the difference in the world to our investigation.

Jen Lamont — BSA Compliance Officer & Fraud Manager, America's Credit Union

Winning Strategies

AI amplifies human judgment

The most effective teams pair AI-driven detection with human decisioning. Automation handles scale and pattern detection; humans resolve ambiguity and edge cases.

Layered defenses outperform single controls

Document verification works best as one signal among many. Identity, device, behavioral, and network signals catch what any single layer misses.

Shared intelligence strengthens defenses

Fraudsters collaborate openly. Teams that share patterns internally and across institutions adapt faster and detect emerging threats earlier.

Culture drives outcomes

Organizations that openly discuss fraud patterns, hits, and misses identify issues sooner and respond more effectively.

Conclusion

Document fraud in 2025 was not defined by a single tactic or technology, but by scale, accessibility, and speed. Generative AI has lowered the barrier to creating convincing fakes. Template marketplaces have industrialized reuse. And shared knowledge (among both fraudsters and fraud fighters) determines how quickly new techniques spread.

The result is not just more document fraud, but earlier and quieter fraud. Manipulated documents now surface upstream, before transactional signals fire. Manual review processes that once worked at lower volumes are increasingly overwhelmed. The cost is not only fraud losses, but slower decisions, growing backlogs, and customer friction that compounds over time.

But this research also shows a clear countertrend. The most effective fraud teams are taking decisive action:

- Redesigning how fraud work gets done

- Using AI to handle volume, surface risk signals, and reduce review time while keeping human judgment where it matters most

- Layering defenses intentionally, integrating signals rather than accumulating tools

- Sharing intelligence across teams and institutions to shorten the time it takes to recognize and respond to new patterns

The path forward is not about replacing people with technology, nor about reacting to AI-driven fraud with fear. It's about building scalable, resilient fraud programs that protect good customers without exhausting fraud teams.

In an environment where fraud tactics scale quickly, inaction compounds faster. The organizations that invest now position themselves not just to withstand today's threats, but to operate with confidence as the landscape continues to evolve.

Fraud has moved from cut-and-paste to cut-and-code. The good news is that the same AI driving this threat can also be the solution. What matters now is how quickly institutions adopt systems that adapt as fast as fraud evolves.

Methodology

This report draws on three primary sources:

Inscribe Network Data (January through November 2025): Detection data from millions of documents processed across hundreds of banks, credit unions, fintechs, and lenders using the Inscribe platform.

Inscribe Fraud Fighter Survey (November and December 2025): Survey responses from 90 fraud and risk leaders across the financial services industry.

Practitioner Interviews (2025): Conversations with fraud and risk leaders including:

- Michelle Prohaska, Chief Banking and Risk Officer, Nymbus

- Anurag Puranik, Chief Risk Officer, Coast

- Patrick Lord, Senior Project Manager, Rapid Finance

- Timothy O'Rear, Senior Underwriter, Rapid Finance

- Angela Diaz, Senior Principal of Operational Risk Management, Discover

- Michael Coomer, Director of Fraud Management, BHG Financial

- Matt Overin, Manager of Risk Management, Logix Federal Credit Union

- Frank McKenna, Chief Fraud Strategist, Point Predictive

- Hailey Windham, Community Banking Lead, Sardine

- Jen Lamont, BSA Compliance Officer & Fraud Manager, America's Credit Union

- Nickie Christianson, Senior Manager, Account Protection Team, BCU

- Tyler Davenport, Investigator, Account Protection Team, BCU

Additional data and analysis drawn from Inscribe blog posts and research published throughout 2025.

For more information about Inscribe's document fraud detection capabilities, visit inscribe.ai or contact our team to schedule a conversation.

FAQs

How common is document fraud?

In 2025, approximately 6% of all documents processed across Inscribe's network were flagged as fraudulent, or roughly 1 in 16 documents showing signs of manipulation, fabrication, or misrepresentation. For an organization processing 10,000 loan applications annually with three documents each (30,000 documents), that translates to roughly 1,800 potentially fraudulent documents per year.

Which documents are most targeted by fraudsters?

Bank statements are the top concern among fraud and risk leaders, with 85.56% citing them as the most vulnerable document type. Pay stubs and business financial documents rank second and third. Utility bills show the highest fraud rate in detection data, likely because they're perceived as lower-risk and receive less scrutiny during review.

How is AI changing document fraud?

AI has transformed document fraud in two ways. Fraudsters now use generative AI to create convincing fake documents from scratch or edit legitimate documents with precision that defeats visual inspection. Detected AI-generated document fraud increased nearly 5x between April and December 2025. At the same time, 97.78% of fraud leaders express concern about AI-enabled fraud, recognizing it as an immediate operational threat.

What types of edits do fraudsters make to documents?

Over 90% of flagged documents include altered financial details — either alone or combined with identity changes. This includes manipulated income figures, edited transaction amounts, and fabricated account balances. The dominance of financial manipulation cuts across first-party, third-party, and synthetic identity fraud categories.

What are document fraud templates?

Document fraud templates are pre-made, editable files sold on online marketplaces for as little as $10. They allow anyone to create fake bank statements, pay stubs, and utility bills without technical skills. In 2025, 1 in 5 flagged documents showed signs of template-based manipulation — up from 1 in 14 in 2024.

Can AI detect fraudulent documents?

Yes. AI-powered detection analyzes documents for manipulation patterns, metadata artifacts, financial inconsistencies, and cross-document signals invisible to manual review. Organizations using AI detection have reported preventing millions in fraud losses while reducing document review time by up to 99%.

What strategies work best against document fraud?

The most effective fraud programs combine four elements: AI-powered detection to handle volume and surface hidden signals, human expertise for complex investigations and judgment calls, layered defenses integrating document verification with identity and behavioral signals, and industry collaboration to share intelligence about emerging patterns.

What is the methodology behind this report?

This report synthesizes three sources: detection data from millions of documents processed across hundreds of financial institutions in 2025, survey responses from 90 fraud and risk leaders collected in November and December 2025, and interviews with 15 practitioners including chief risk officers, fraud managers, and industry experts.
